As artificial intelligence (AI) becomes more integrated into business tools, understanding the concepts of “grounding” and “hallucinations” helps ensure accurate and reliable AI outputs.

These terms point to two of the biggest challenges in AI today: keeping an AI model connected to real-world knowledge (grounding) and curbing its tendency to produce false or misleading information (hallucinations).

In this article, we’ll explore what grounding and hallucinations are in AI, how they affect enterprise search, and what to look for in a solution to prevent these issues.

What is grounding in AI?

Grounding in AI refers to the process by which an AI system connects its outputs to real-world knowledge and relevant data. In simple terms, grounding aims to ensure that when an AI model generates information, it’s based on factual, current, and verifiable data sources.

For AI systems like large language models (LLMs), which learn from massive datasets, maintaining proper grounding is essential to ensure that the information they produce aligns with reality.

Without grounding, AI models can drift into producing outputs that have no basis in actual facts. This is where hallucinations occur.

What are hallucinations in AI?

AI hallucinations are outputs that appear coherent and relevant but are factually incorrect, misleading, or nonsensical. They often stem from the model’s training data, which may be outdated, incomplete, or inaccurate, and from the way these models generate text: they predict likely continuations of a prompt rather than look facts up.

Hallucinations can be a particular issue with large language models because, although these models excel at generating human-like text, they do not have a built-in understanding of real-world data. The AI model generates information based on patterns it has seen in its training data, but without proper grounding, it may create plausible-sounding content that isn’t rooted in real-world knowledge.

For IT and engineering professionals deploying AI systems, including those involved in enterprise search or data management, understanding how and when AI hallucinations occur is key to building trustworthy solutions.

Examples of hallucinations in AI

Below are some common examples of hallucinations or outputs that demonstrate a lack of grounding in AI:

Example 1:

- User’s request: “Give me a list of academic papers that discuss the impact of AI on climate change.”

- AI response: “Here are some papers: ‘The Effects of AI on Climate Patterns’ by Dr. Maria Johnson, published in Nature Journal in 2020.”

- Hallucination: The paper mentioned does not exist, nor does the author, but the output seems plausible due to the coherent structure and familiar format.

Example 2:

- User’s request: “Tell me about the first artificial intelligence robot created by Tesla.”

- AI response: “Tesla’s first AI-powered robot, ‘Optimus Probot,’ was launched in 2018 and could solve complex engineering tasks autonomously.”

- Hallucination: Tesla has never released a robot called ‘Optimus Probot,’ and no such launch happened in 2018; Tesla’s humanoid robot project, Optimus, was first announced in 2021. The response is a fabrication, despite its believable tone.

Example 3:

- User’s search query: “Find the latest remote work policy document for 2024.”

- AI output: “The 2024 remote work policy was updated in January 2024 to include mandatory two days of in-office work per week.”

- Hallucination: In reality, the policy had not been updated for 2024, but the AI model, lacking proper grounding, invented an update.

Such hallucinations in enterprise search can mislead employees, creating confusion and even disrupting workflows when they rely on inaccurate or nonexistent information.

The role of grounding in preventing hallucinations

Preventing hallucinations largely depends on ensuring that AI systems have proper grounding. There are several ways to reinforce this grounding:

- Data quality: Training the AI on accurate, up-to-date data from real-world sources reduces the chances of hallucinations.

- Retrieval-augmented generation (RAG): This technique combines a retrieval system with a generation model. At query time, the system retrieves relevant documents from a knowledge base and passes them to the model as context, so the answer is based on that real-world information rather than only on what the model learned during training.

- AI monitoring and fine-tuning: Monitoring outputs and regularly fine-tuning models with current data helps reduce hallucinations and keeps the AI system aligned with relevant information.
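To make the retrieval step concrete, here is a minimal Python sketch of the “retrieve, then generate” pattern. It assumes a small in-memory knowledge base with hypothetical document IDs and ranks documents by simple keyword overlap, where a production RAG system would more likely use a search index or vector embeddings. It only builds the grounded prompt; the call to an actual language model is left out.

```python
import re

# Hypothetical in-memory knowledge base: document ID -> document text.
KNOWLEDGE_BASE = {
    "hr-remote-2023": "Remote work policy (2023): employees may work remotely up to five days per week.",
    "it-vpn": "All remote connections must use the corporate VPN.",
    "benefits": "Health benefits enrollment opens every November.",
}


def tokenize(text):
    """Lowercase word tokens; a deliberately simple stand-in for a real analyzer."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query, kb, k=2):
    """Return up to k document IDs ranked by how many query tokens they share."""
    query_tokens = set(tokenize(query))
    scored = []
    for doc_id, text in kb.items():
        score = len(query_tokens & set(tokenize(text)))
        if score > 0:  # skip documents with no overlap at all
            scored.append((score, doc_id))
    # Highest score first; ties broken alphabetically for stable output.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for _, doc_id in scored[:k]]


def build_grounded_prompt(query, kb, k=2):
    """Put the retrieved documents in the prompt and tell the model to stay within them."""
    context = "\n".join(f"[{doc_id}] {kb[doc_id]}" for doc_id in retrieve(query, kb, k))
    return (
        "Answer using ONLY the sources below and cite their IDs. "
        "If the sources do not contain the answer, say you don't know.\n\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )


print(retrieve("remote work policy", KNOWLEDGE_BASE))  # ['hr-remote-2023', 'it-vpn']
print(build_grounded_prompt("remote work policy", KNOWLEDGE_BASE))
```

Here the 2023 policy text lands in the prompt, so the model has the actual document in front of it instead of having to recall (or invent) one. The instruction to say “I don’t know” when the sources are silent gives the model an explicit alternative to guessing, although models do not always follow such instructions.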

In practice, reducing hallucinations means building systems that check AI outputs against real-world data sources and continuously updating models to reflect new information.
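One simple way to check an answer against its sources is to flag sentences that the retrieved documents do not appear to support. The sketch below uses a naive word-overlap heuristic with an arbitrary support threshold of 0.6. Both are assumptions for illustration. Real systems often use stronger methods, such as a second model that judges whether each claim follows from the source.

```python
import re


def tokens(text):
    """Set of lowercase word tokens in a piece of text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def unsupported_sentences(answer, sources, threshold=0.6):
    """Return answer sentences that no single source appears to support.

    A sentence's support is the largest fraction of its tokens found in any one
    source; sentences below the (arbitrary) threshold are flagged.
    """
    flagged = []
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        sentence_tokens = tokens(sentence)
        if not sentence_tokens:
            continue
        best = max(
            (len(sentence_tokens & tokens(src)) / len(sentence_tokens) for src in sources),
            default=0.0,  # no sources at all means nothing is supported
        )
        if best < threshold:
            flagged.append(sentence)
    return flagged


sources = ["Remote work policy (2023): employees may work remotely up to five days per week."]
answer = (
    "Employees may work remotely up to five days per week. "
    "The policy was updated in January 2024 to require two office days."
)
print(unsupported_sentences(answer, sources))
# ['The policy was updated in January 2024 to require two office days.']
```

Given an answer like the one in Example 3, the invented “January 2024” update is flagged because few of its words appear in the only available policy document. The sentence restating the 2023 policy passes. Word overlap is easy to fool (a negated sentence can share every word with its source), so this works as a first-pass filter rather than a guarantee.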

How grounding & hallucinations impact enterprise search

AI-powered enterprise search solutions promise to revolutionize how companies retrieve and manage their vast amounts of data. However, the effectiveness of these solutions depends on their ability to produce accurate, grounded results.

If an AI-powered search tool generates outputs based on hallucinations, it can mislead employees, slow down decision-making, and create inefficiencies in the workplace.

Here are some key areas where grounding and hallucinations can affect enterprise search:

- Accuracy of search results: If the AI model used in enterprise search is not properly grounded in real-world knowledge, it may return irrelevant or incorrect search results. Employees might waste valuable time sifting through unreliable information.

- Data integration: Enterprise search systems often pull information from multiple sources. If the AI doesn’t correctly integrate data from these sources, hallucinations can occur, resulting in search results that seem relevant but don’t reflect the true data available.

- Trust in AI systems: Hallucinations can erode trust in AI-powered tools. If employees frequently encounter irrelevant or inaccurate search results, they may be hesitant to rely on AI solutions for critical tasks.

What to look for in AI-powered enterprise search

When evaluating AI-powered enterprise search solutions, you should focus on the following aspects to avoid hallucinations and ensure proper grounding:

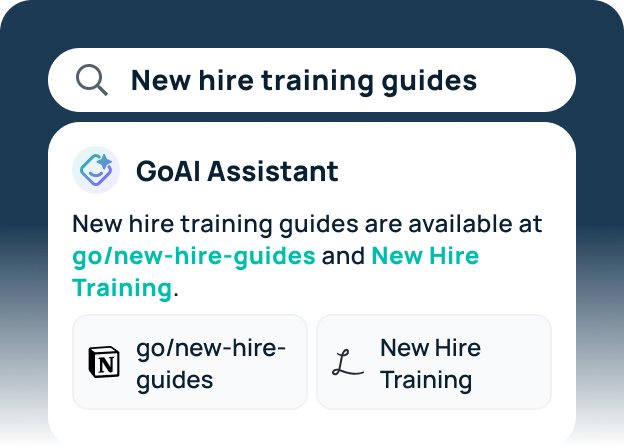

- Real-time data integration: Look for systems like GoSearch that integrate real-world data in real time, keeping search results current and relevant.

- Quality of data sources: Ensure that the AI system uses verified and high-quality data sources to inform its outputs, reducing the risk of hallucinations.

- Retrieval-augmented generation (RAG): Systems that incorporate RAG are less likely to hallucinate because they generate outputs based on relevant data rather than relying solely on a pre-trained model.

- Regular AI model updates: Check that the AI system is designed to be updated frequently with new data, keeping its outputs grounded in the most recent information.
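Keeping answers current also means resolving “latest” questions against what is actually indexed, and saying so when nothing matches. The sketch below assumes a hypothetical `PolicyVersion` record with an effective date. When no version exists for the requested year, it returns `None` instead of producing an answer. The search layer can then report “no 2024 version found” rather than invent one, as happened in Example 3.

```python
from dataclasses import dataclass
from datetime import date
from typing import Iterable, Optional


@dataclass(frozen=True)
class PolicyVersion:
    """Hypothetical record for one indexed version of a policy document."""
    doc_id: str
    title: str
    effective: date


def latest_version(
    versions: Iterable[PolicyVersion], year: Optional[int] = None
) -> Optional[PolicyVersion]:
    """Return the newest indexed version, optionally restricted to one year.

    Returns None when nothing matches, so the caller can say so explicitly.
    """
    candidates = [v for v in versions if year is None or v.effective.year == year]
    return max(candidates, key=lambda v: v.effective, default=None)


versions = [
    PolicyVersion("rw-2022", "Remote work policy", date(2022, 3, 1)),
    PolicyVersion("rw-2023", "Remote work policy", date(2023, 6, 15)),
]
print(latest_version(versions).doc_id)  # rw-2023
print(latest_version(versions, 2024))   # None
```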

Improve workplace knowledge management with GoSearch

Grounding and hallucinations are critical considerations for any AI-powered system, especially in enterprise environments where accurate information is essential.

GoSearch, an advanced AI-powered enterprise search tool, ensures that your organization’s knowledge management is both efficient and reliable. With features like real-time data integration and retrieval-augmented generation, GoSearch helps prevent hallucinations and provides accurate, relevant information based on real-world data.

Learn more about improving employee productivity and data accuracy with GoSearch – schedule a demo.
